Vanishing and Exploding Gradients in Neural Network Models: Debugging, Monitoring, and Fixing - neptune.ai

Analysis of Gradient Clipping and Adaptive Scaling with a Relaxed Smoothness Condition | Semantic Scholar

What is Gradient Clipping?. A simple yet effective way to tackle… | by Wanshun Wong | Towards Data Science

Stability and Convergence of Stochastic Gradient Clipping: Beyond Lipschitz Continuity and Smoothness: Paper and Code - CatalyzeX

Deep-Learning-Specialization/Dinosaurus_Island_Character_level_language_model_final_v3a.ipynb at master · gmortuza/Deep-Learning-Specialization · GitHub

Effect of weight normalization and gradient clipping on Google Billion... | Download Scientific Diagram
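Every link in this list is about gradient clipping. The sketch below shows its two common variants: element-wise clipping by value, and rescaling by the global L2 norm. It is a minimal example that assumes gradients are stored as a plain dict mapping parameter names to flat lists of floats. That layout is hypothetical and was chosen only to keep the example dependency-free; real code would use tensors. The names `clip_by_value` and `clip_by_global_norm` are illustrative and are not any library's API.

```python
import math
from typing import Dict, List

# Hypothetical layout: parameter name -> flat list of gradient values.
Grads = Dict[str, List[float]]


def clip_by_value(grads: Grads, max_value: float) -> Grads:
    """Element-wise clipping: force every entry into [-max_value, max_value]."""
    return {name: [min(max(g, -max_value), max_value) for g in values]
            for name, values in grads.items()}


def clip_by_global_norm(grads: Grads, max_norm: float) -> Grads:
    """Rescale all gradients together so their joint L2 norm is at most max_norm.

    Unlike clipping by value, this keeps the direction of the update unchanged.
    """
    total_norm = math.sqrt(sum(g * g for values in grads.values() for g in values))
    if total_norm <= max_norm:
        # Already small enough: return an unchanged copy.
        return {name: list(values) for name, values in grads.items()}
    scale = max_norm / total_norm
    return {name: [g * scale for g in values] for name, values in grads.items()}


grads = {"W": [3.0, -4.0], "b": [0.0]}  # joint L2 norm = 5.0
print(clip_by_value(grads, 1.0))        # {'W': [1.0, -1.0], 'b': [0.0]}
print(clip_by_global_norm(grads, 2.5))  # {'W': [1.5, -2.0], 'b': [0.0]}
```

Clipping by value can change the direction of the gradient, because `[3, -4]` becomes `[1, -1]`. Clipping by norm only shrinks its length. In PyTorch, the corresponding built-ins are `torch.nn.utils.clip_grad_value_` and `torch.nn.utils.clip_grad_norm_`.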

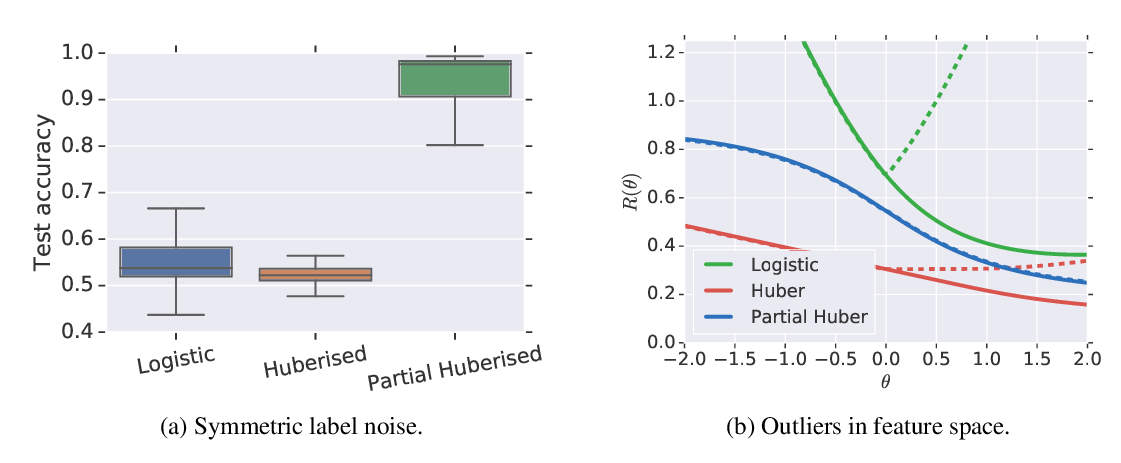

![Daniel Jiwoong Im on Twitter: "Can gradient clipping mitigate label noise?" A: No, but partial gradient clipping does. Softmax loss consists of two terms: log-loss & softmax score (log[sum_j[exp z_j]] - z_y)](https://pbs.twimg.com/media/EWsqtbpUcAIr9d8?format=jpg&name=large)

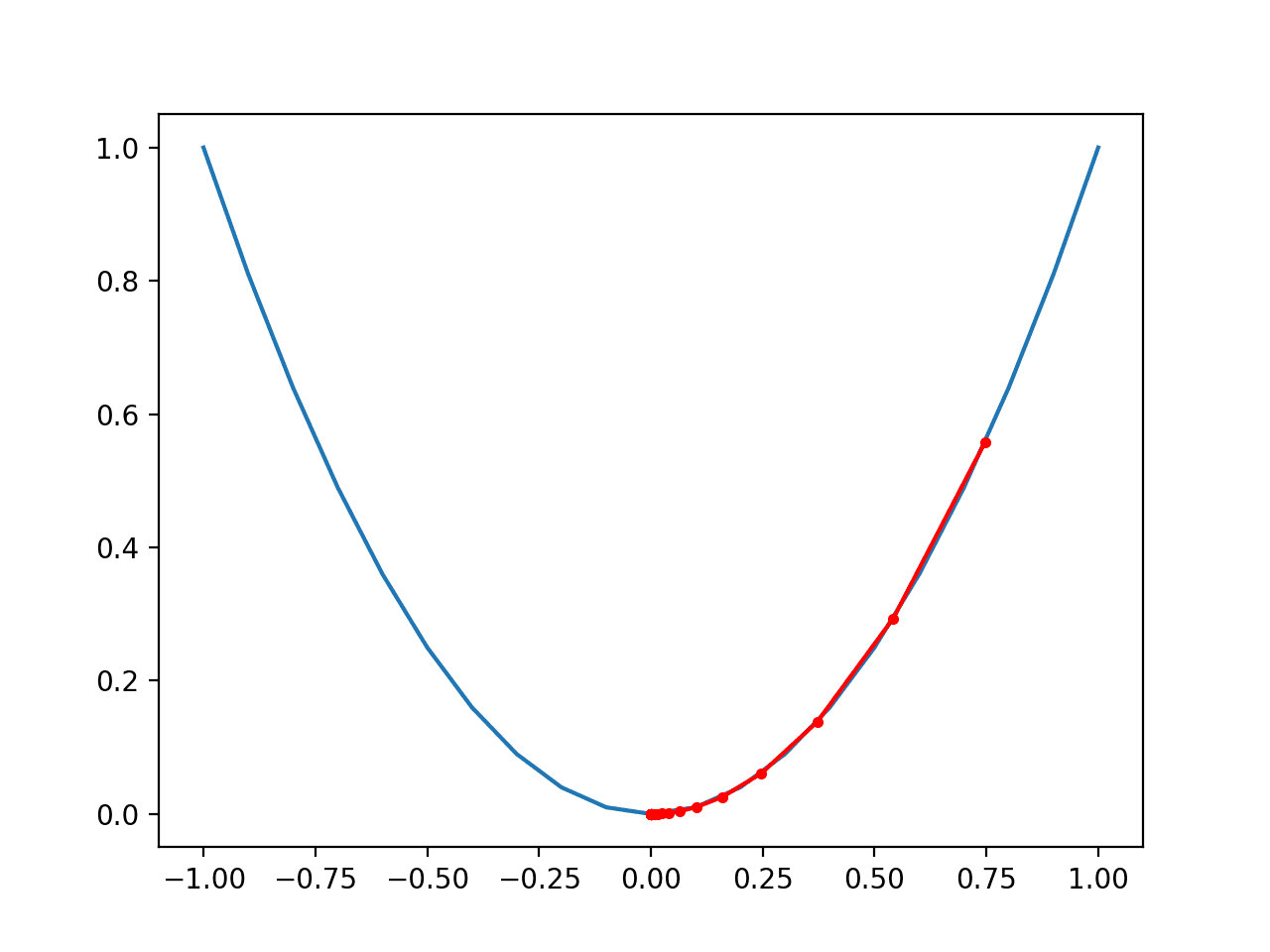

![Cliffs and exploding gradients - Hands-On Transfer Learning with Python [Book]](https://www.oreilly.com/api/v2/epubs/9781788831307/files/assets/7da599f5-2250-4acf-b6ef-e8dd7d188957.png)